Research

My work involves the full pipeline of robot manipulation, so here you will find a wide variety of things I do related to each module that allows Crichton to manipulate objects:

- Pouring in different locations (because it is not always the center!)

- Robot picks and places objects on a table, on top of a box and inside a box

- Robot pours

- Robot sees, robot recognizes

- Generalization: Robots galore!

- Efficient Manipulation Planning using Task Goals

- Grasping unknown objects using superquadrics approximation

- Path Planning for Redundant Robotic Arms

Side Project of the Semester (Spring 2015)

Various previous projects

Crichton pours from 3 objects into a bowl at different places on a table

| Tests with 3 different objects (white cup, Pringles and mustard). The objects start at 3 different locations and are poured into a bowl placed at 6 different goal locations (only 4 examples per object are shown due to lack of space). If for some reason this video does not play here, you can see the YouTube version here. |

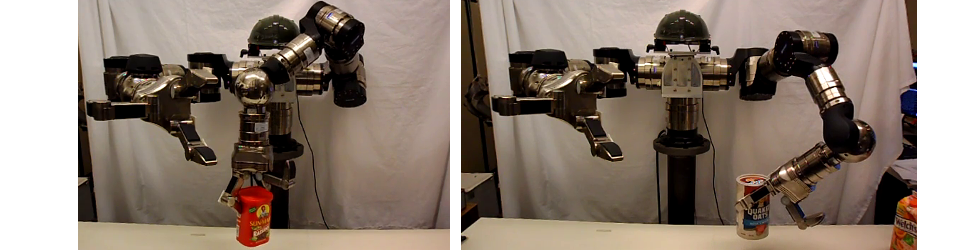

Crichton picking and placing objects on a table, on top of a box and inside a box!

| Tests with 10 objects (the video shows 4: Pringles container, milk, Cheez-It and Raisins box; I still have to find the time to edit the remaining 6 clips and upload them here!). If for some reason this video does not play here, you can see the YouTube version here. |

Crichton pours from 3 different objects!

| Tests with 3 objects: Pringles container, Soft Scrub and Raisins box. If for some reason this video does not play here, you can see the YouTube version here. More details coming soon! |

Crichton recognizing 42 objects!

| Humans manipulate objects every day, and we unconsciously use our experience to decide how to handle them. While we do not have exact information about every object (i.e. detailed 3D models), we certainly know "something" about most objects we face. For instance, if we see a cup we will likely grasp it from the side and keep it upright. This video shows the results of teaching Crichton to recognize 42 different household objects (30 of them from the YCB dataset). By recognizing an object, Crichton can in turn access pre-stored information about it, such as a general description and general information about its affordances, which is useful for the subsequent manipulation planning step. |

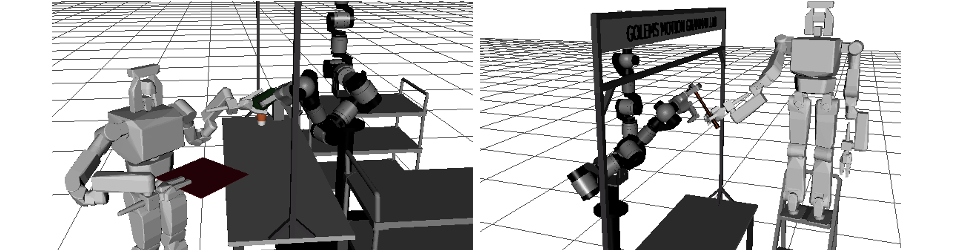
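The recognize-then-retrieve idea above can be sketched as a lookup keyed by the recognizer's output label. This is a minimal, hypothetical sketch: the `ObjectInfo` fields, the `lookup` helper and the single `cup` entry (taken from the cup example above) are illustrative assumptions, not the actual format of Crichton's object database.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ObjectInfo:
    """Hypothetical pre-stored knowledge about one object class."""
    description: str
    grasp_approach: str   # affordance hint, e.g. "side" or "top"
    keep_upright: bool    # whether the object should stay upright when moved


# Hypothetical knowledge base keyed by the label the recognizer outputs.
KNOWLEDGE_BASE: Dict[str, ObjectInfo] = {
    "cup": ObjectInfo("open container for liquids", "side", True),
}


def lookup(label: str) -> Optional[ObjectInfo]:
    """Return pre-stored info for a recognized label, or None if unknown."""
    return KNOWLEDGE_BASE.get(label.strip().lower())


info = lookup("Cup")
if info is not None:
    print(info.grasp_approach, info.keep_upright)  # side True
```

Returning `None` for labels with no stored entry leaves it to the caller to decide how to handle objects the knowledge base knows nothing about.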

Hi Baxter!

| As much as I love Crichton, it is evident to me that he is not the only robot on Earth. We decided to test his pipeline on another platform, and we had the great chance to pay a visit to Dr. Ben Amor at Arizona State University. Dr. Ben Amor happened to have a Baxter, so we set out to transfer some of Crichton's manipulation code to said Baxter (a.k.a. Crichton Jr.) and got some neat results. I was only there for the week, so I am looking forward to having more time to play around with the red robot in the future. Besides the joy of working with another platform, it is worth noting that the algorithms we design are hardware agnostic; hence, in theory, they should run on any robot, as was the case here. |

Efficient Manipulation Planning using Task Goals

| Our recent work submitted to IROS! To grasp an object, you have to choose among potentially infinitely many possibilities, particularly if your arm is redundant. In this work, we investigate how to use the end-comfort effect (the human tendency to choose a grasp that leaves us in a comfortable posture at the end of the task) to help us choose a solution quickly and effectively. |

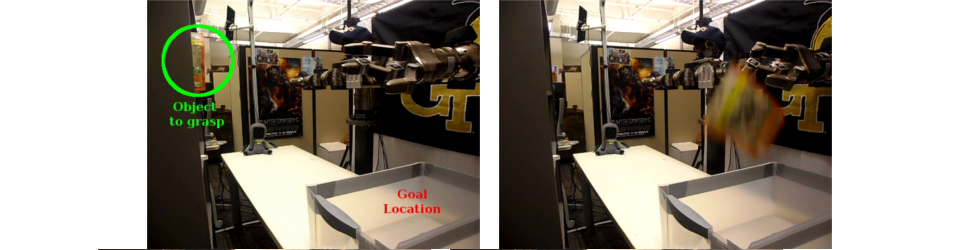
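One simple way to turn the end-comfort idea into a selection rule is to score each candidate grasp by how comfortable the arm's configuration would be at the end of the task, and keep the most comfortable one. The sketch below assumes a hypothetical 3-joint arm with symmetric `JOINT_LIMITS` and measures discomfort as the normalized distance of each joint from the middle of its range; it illustrates the idea and is not the formulation used in the paper.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical joint limits (radians) of a toy 3-joint arm.
JOINT_LIMITS: List[Tuple[float, float]] = [(-math.pi, math.pi), (-2.0, 2.0), (-2.5, 2.5)]


def discomfort(q: Sequence[float],
               limits: Sequence[Tuple[float, float]] = JOINT_LIMITS) -> float:
    """Sum of squared normalized distances of each joint from mid-range:
    0 when every joint is centered, 1 per joint sitting exactly at a limit."""
    total = 0.0
    for qi, (lo, hi) in zip(q, limits):
        mid = 0.5 * (lo + hi)
        half_range = 0.5 * (hi - lo)
        total += ((qi - mid) / half_range) ** 2
    return total


def pick_end_comfortable(
        candidates: Sequence[Tuple[str, Sequence[float]]]) -> Tuple[str, Sequence[float]]:
    """Each candidate pairs a grasp label with the arm configuration it would
    lead to at the END of the task; return the one with the lowest discomfort."""
    return min(candidates, key=lambda c: discomfort(c[1]))


candidates = [
    ("top grasp", [0.0, 1.9, 2.4]),    # task would end near the joint limits
    ("side grasp", [0.1, 0.2, -0.3]),  # task would end near mid-range
]
print(pick_end_comfortable(candidates)[0])  # side grasp
```

Scoring the final rather than the initial configuration is what distinguishes this from a plain "closest to home posture" rule: a grasp that is awkward at pick-up time can still win if it leaves the arm comfortable once the task is done.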

Grasping Unknown Objects using online Superquadrics Approximation

| I still have to add more details here, but in short: does your robot have what it takes to grasp unknown objects quickly and efficiently? This work will be presented at ICRA 2015 in May. |

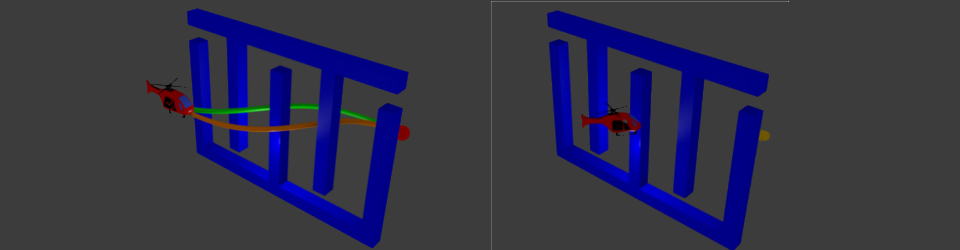
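For readers unfamiliar with superquadrics: a superquadric is described by three semi-axis lengths (a1, a2, a3) and two shape exponents (e1, e2), and its inside-outside function F tells whether a point lies inside (F < 1), on (F = 1) or outside (F > 1) the surface. Fitting one to a partial point cloud typically means minimizing a residual built from this function. The sketch below shows the standard inside-outside function in the object's own frame and one commonly used least-squares residual; pose estimation and the optimizer are omitted, and nothing here is taken from the paper's actual implementation.

```python
import math
from typing import Iterable, Tuple


def inside_outside(x: float, y: float, z: float,
                   a1: float, a2: float, a3: float,
                   e1: float, e2: float) -> float:
    """Superquadric inside-outside function, point given in the object frame.
    F < 1: inside, F == 1: on the surface, F > 1: outside."""
    fx = abs(x / a1) ** (2.0 / e2)
    fy = abs(y / a2) ** (2.0 / e2)
    fz = abs(z / a3) ** (2.0 / e1)
    return (fx + fy) ** (e2 / e1) + fz


def fitting_cost(points: Iterable[Tuple[float, float, float]],
                 a1: float, a2: float, a3: float,
                 e1: float, e2: float) -> float:
    """Least-squares residual a fitter would minimize over the shape parameters
    (and, in practice, over the object's pose, which is omitted here)."""
    scale = math.sqrt(a1 * a2 * a3)
    return sum(
        (scale * (inside_outside(x, y, z, a1, a2, a3, e1, e2) ** e1 - 1.0)) ** 2
        for x, y, z in points
    )


# Unit sphere: a1 = a2 = a3 = 1 and e1 = e2 = 1.
print(inside_outside(0.5, 0.0, 0.0, 1, 1, 1, 1, 1))                   # 0.25 (inside)
print(fitting_cost([(1, 0, 0), (0, 1, 0), (0, 0, 1)], 1, 1, 1, 1, 1))  # 0.0
```

With e1 = e2 = 1 the shape is an ellipsoid (a sphere when the semi-axes are equal), while exponents approaching 0 give increasingly box-like shapes, which is what makes a single superquadric a compact approximation for many household objects.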

Path Planning for Redundant Robotic Arms

| Redundancy is a desirable feature in robotic arms. Why? Well, having more degrees of freedom (DOF) than a task requires means there is more than one way to accomplish a manipulation task. However, this flexibility also raises questions: which of all the alternatives should we choose? Is it possible to list all the possibilities so we can choose "the best" one? I propose a deterministic method to express these alternatives by discretizing the nullspace of the arm and searching through it. Using different heuristic functions, we evaluate the configurations and choose the best one according to our requirements. |
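The discretize-then-search idea can be illustrated on a toy problem. The sketch below assumes a planar 3-link arm reaching a 2-D point: the task uses 2 of the 3 DOF, so the remaining one-dimensional redundancy is parameterized (an assumption made for this example) by the absolute angle `phi` of the last link. Sampling `phi` on a fixed grid, solving the remaining 2-link IK analytically and ranking the results with a pluggable heuristic mirrors the approach described above; the helper names are hypothetical, and this is a stand-in rather than the method as implemented for Crichton's arms.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Config = Tuple[float, float, float]


def wrap(angle: float) -> float:
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def ik_fixed_phi(px: float, py: float, phi: float,
                 lengths: Sequence[float]) -> List[Config]:
    """Planar 3R IK once the redundant parameter phi (absolute angle of the
    last link) is fixed: 0, 1 or 2 solutions (the two elbow branches)."""
    l1, l2, l3 = lengths
    wx = px - l3 * math.cos(phi)  # wrist point implied by this phi
    wy = py - l3 * math.sin(phi)
    c2 = (wx * wx + wy * wy - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0:
        return []  # wrist out of reach for this phi
    s = math.sqrt(1.0 - c2 * c2)
    branches = (s, -s) if s > 0.0 else (0.0,)
    solutions = []
    for s2 in branches:
        t2 = math.atan2(s2, c2)
        t1 = math.atan2(wy, wx) - math.atan2(l2 * s2, l1 + l2 * c2)
        solutions.append((wrap(t1), t2, wrap(phi - t1 - t2)))
    return solutions


def forward(q: Config, lengths: Sequence[float]) -> Tuple[float, float]:
    """End-effector position of the planar 3R arm."""
    l1, l2, l3 = lengths
    a1 = q[0]
    a2 = a1 + q[1]
    a3 = a2 + q[2]
    return (l1 * math.cos(a1) + l2 * math.cos(a2) + l3 * math.cos(a3),
            l1 * math.sin(a1) + l2 * math.sin(a2) + l3 * math.sin(a3))


def search_redundancy(px: float, py: float, lengths: Sequence[float],
                      heuristic: Callable[[Config], float],
                      steps: int = 72) -> Optional[Config]:
    """Discretize the 1-D redundancy into `steps` samples of phi, solve IK for
    each sample and keep the configuration with the lowest heuristic cost."""
    best, best_cost = None, math.inf
    for k in range(steps):
        phi = -math.pi + 2.0 * math.pi * k / steps
        for q in ik_fixed_phi(px, py, phi, lengths):
            cost = heuristic(q)
            if cost < best_cost:
                best, best_cost = q, cost
    return best


def home_distance(q: Config) -> float:
    """Example heuristic: prefer configurations close to the zero posture."""
    return sum(a * a for a in q)


LENGTHS = (1.0, 1.0, 1.0)
q_best = search_redundancy(1.5, 1.0, LENGTHS, home_distance)
print(q_best, forward(q_best, LENGTHS))
```

Swapping `home_distance` for another cost (for example, distance from joint limits or from obstacles) changes which configuration is selected without touching the search itself; the grid resolution `steps` trades completeness against computation time.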

The Robot vs the Cabinet

| Bin picking, although an inherently simple problem, is not that easy to solve for a robot with limbs as long as ours (not to mention his freaking long fingers). Whenever I have free time, I step away from benchmarking general manipulation problems and investigate how to efficiently generate plans so that Crichton can become a future warehouse robot :0} |

Dynamic Signaling of Robot Teams under Human Supervision

| How many robots can a human operator reasonably supervise? While many people agree that the number depends on the task to be performed, it is natural that the more robots there are, the more attention the human supervisor must pay. In this project, we implement a mock environment in which 2 robots search for a red object. Once one of them finds it, the robot approaches it to verify the object's identity and then fires a visual dynamic signal (moving back and forth) to catch the supervisor's attention. The simulation was built with the Gazebo simulator coupled with ROS to access simulated Kinect and odometry data. |
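The behavior of each robot in this mock scenario can be summarized as a small state machine. The sketch below is hypothetical: the state names, the boolean sensor flags passed to `step` and the `speed`/`period` values of the back-and-forth signal are illustrative assumptions, and all ROS/Gazebo plumbing (topics, Kinect processing, odometry) is omitted.

```python
from enum import Enum, auto


class State(Enum):
    SEARCH = auto()    # wander looking for a red object
    APPROACH = auto()  # drive toward the candidate object
    VERIFY = auto()    # check that it really is the target
    SIGNAL = auto()    # move back and forth to attract the supervisor


def step(state: State, red_seen: bool, at_object: bool, verified: bool) -> State:
    """Advance one robot's behavior by one control tick."""
    if state is State.SEARCH:
        return State.APPROACH if red_seen else State.SEARCH
    if state is State.APPROACH:
        return State.VERIFY if at_object else State.APPROACH
    if state is State.VERIFY:
        return State.SIGNAL if verified else State.SEARCH
    return State.SIGNAL  # stays in SIGNAL; supervisor handling is out of scope


def signal_velocity(tick: int, speed: float = 0.2, period: int = 10) -> float:
    """Forward speed for the back-and-forth signal: +speed for the first half
    of each period, -speed for the second half."""
    return speed if tick % period < period // 2 else -speed


state = State.SEARCH
for flags in [(False, False, False), (True, False, False),
              (True, True, False), (True, True, True)]:
    state = step(state, *flags)
print(state)  # State.SIGNAL
```

Sending a rejected candidate back to SEARCH keeps a false detection from triggering the signal, so the supervisor's attention is only requested once the object's identity has been confirmed.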